This is a murder mystery in reverse: you know who died but don’t know the circumstances. Over the course of the plot, you find out more about the situation from various family members’ points of view. Normally, I find this structure annoying, but it works for this novel. By the end of the book, you aren’t even certain who did what to whom. The action takes place mostly around Capri on the Italian coast in some pretty fancy digs, and the family members are all scions of wealth, or so it seems. I liked how things were tied up by the end, though I can’t go into more detail. The characters were all fascinating studies of family dysfunction and seemed very realistically drawn. Highly recommended.

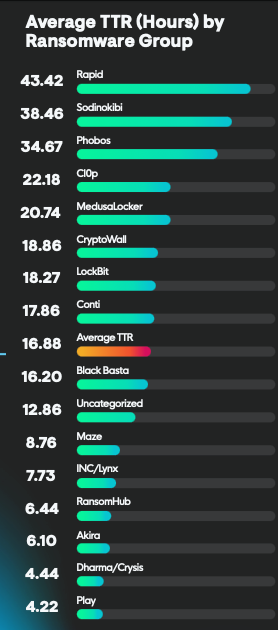

CSOonline: Attack time frames are shrinking rapidly

Times are tough for cyber pros, quite literally. Two common malware time scale metrics — dwell time and time to exploit — are rapidly shortening, making it harder for defenders to find and neutralize threats. With attackers spending far less time hidden in systems, organizations must break down security silos and increase cross-tool integration to accelerate detection and response. I explain the reasons why these two metrics are shortening and what security managers can do to keep up with the bad guys in my latest post for CSOonline.

Beware of evil twin misinformation websites

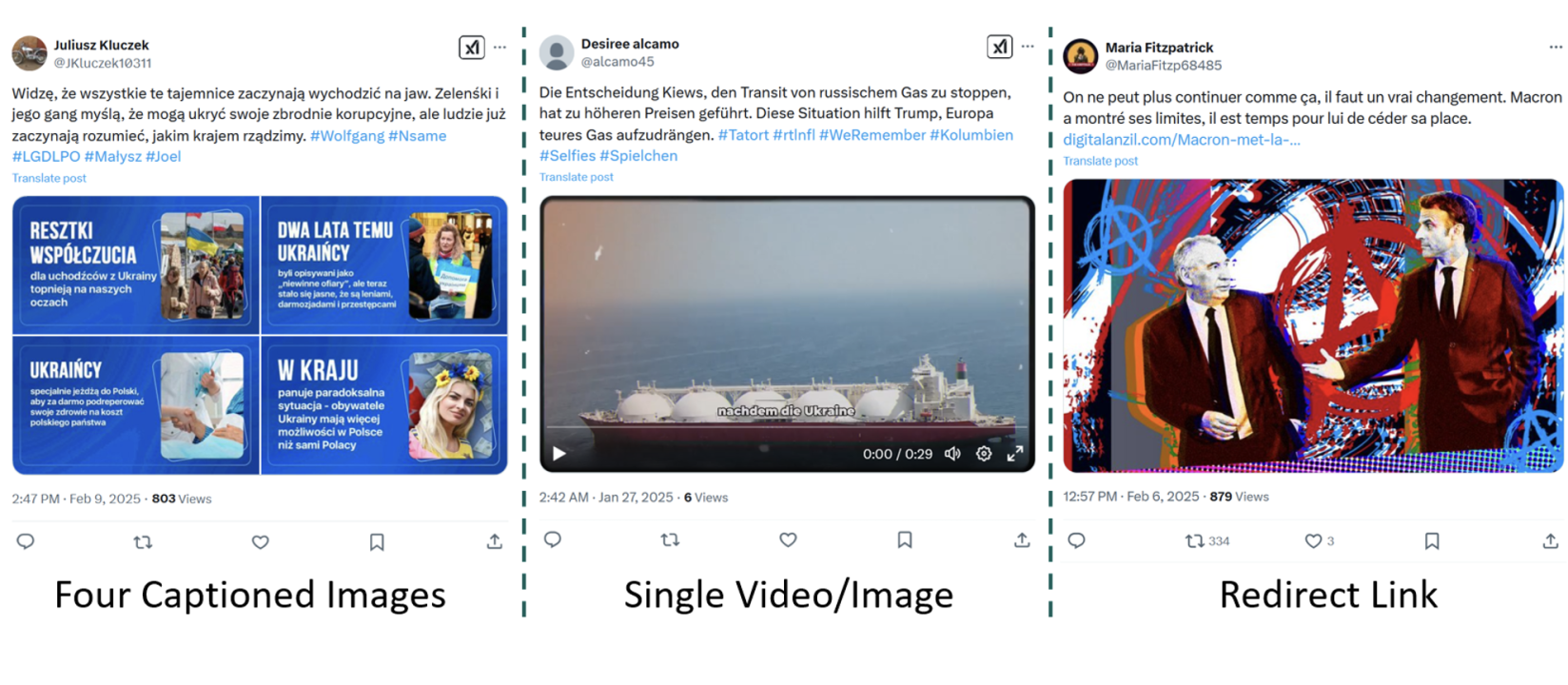

Amid the confusion over whether the US government is actively working to prevent Russian cyberthreats comes a new present from the folks who brought you the Doppelganger attacks of last year. There are at least two criminal gangs involved, Struktura and Social Design Agency. As you might guess, these have Russian state-sponsored origins. Sadly, they are back in business, after being brought down by the US DoJ last year, back when we were more clear-headed about stopping Russian cybercriminals.

Doppelganger got its name because the attack combines a collection of tools to fool visitors into thinking they are browsing the legit website when they are looking at a malware-laced trap. These tools include cybersquatting domain names (names that are close replicas of the real websites) and using various cloaking services to post on discussion boards along with bot-net driven social media profiles, AI-generated videos and paid banner ads to amplify their content and reach. The targets are news-oriented sites and the goal is to gain your trust and steal your money and identity. A side bonus is that they spread a variety of pro-Russian misinformation along the way.
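To make the cybersquatting idea a bit more concrete, here is a minimal sketch of one way a near-replica domain name could be flagged: count how many single-character edits separate it from the real name. This is only an illustration and not the method used by DFRLab or any takedown effort. The domain names, the `looks_like` helper and the two-edit threshold are all hypothetical choices of mine.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest single-character inserts, deletes
    or substitutions needed to turn string a into string b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def looks_like(candidate: str, legit: str, max_edits: int = 2) -> bool:
    """True if candidate is a different name within max_edits of legit.
    The threshold of 2 is an assumed, illustrative value."""
    candidate, legit = candidate.lower(), legit.lower()
    if candidate == legit:
        return False  # the real site is not a lookalike of itself
    return edit_distance(candidate, legit) <= max_edits


# Hypothetical names: a digit "1" swapped in for the letter "l".
print(looks_like("examp1e-news.com", "example-news.com"))
```

A check like this only catches typo-style lookalikes. It says nothing about the cloaking services, bot accounts or paid ads that the campaign also relies on.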

Despite the fall 2024 takedowns, the group is once again active, this time after hiring a bunch of foreign speakers in several languages, including French, German, Polish, and Hebrew. DFRLab has this report about these activities. They show a screencap of a typical post, which often has four images with captions as its page style:

These pages are quickly generated. The researchers found sites with hundreds of them created within a few minutes, along with appending popular hashtags to amplify their reach. They found millions of views across various TikTok accounts, for example. “During our sampling period, we documented 9,184 [Twitter] accounts that posted 10,066 of these posts. Many of these accounts were banned soon after they began posting, but the campaign consistently replaces them with new accounts.” Therein lies the challenge: this group is very good at keeping up with the blockers.

The EU has been tracking Doppelganger but hasn’t yet updated its otherwise excellent page here with these latest multi-lingual developments.

The Doppelganger group’s fraud pattern is a bit different from other misinformation campaigns that I have written about previously, such as fake hyperlocal news sites that are primarily aimed at ad click fraud. My 2020 column for Avast has tips on how you can spot these fakers. And remember back in the day when Facebook actually cared about “inauthentic behavior”? One of Meta’s reports found these campaigns linked to Wagner group, Russia’s no-longer favorite mercenaries.

It seems so quaint viewed in today’s light, where the jobs of content moderators (and apparently government cyber defenders) have gone the way of the digital dustbin.

Red Cross: Helping victims of an apartment fire in Little Rock on New Year’s Eve

The afternoon of the last day of 2024 saw a fire break out in the Midtown Park apartment building in Little Rock. And while confined to a single seven-story building, this fire, like so many others, shows the powerful role that the American Red Cross continues to play. Certainly, the fires surrounding Los Angeles continue to gather news attention, but this one building is a microcosm of how the Red Cross can focus its various resources to help people move on with their lives and get the needed assistance.

The building had 127 occupied apartments: eleven residents were taken to the hospital for treatment, four of whom had critical injuries. Sadly, there was one fatality. The Red Cross was quickly on the scene, establishing a shelter at a nearby church with more than 40 volunteer nurses. You can read more on the Red Cross blog about what happened.

Book review — Innovation in Isolation: The story of Ukrainian IT from 1940s to today

This well-researched book chronicles the unsung history of computing told from the unique perspective of what went on in the former Soviet Union, particularly the pace of innovations in Ukraine. There are period photographs of both the people and the machines that were built. Most of us in the West are familiar with this story told from our perspective, but there was a parallel path that was happening back when Alan Turing, John von Neumann and Grace Hopper were active in the US and UK.

The names Sergey Lebedev and Victor Glushkov may not mean anything to most of us, but both were the key developers of electronic computers in the early days in Ukraine. When room-sized computers were being built in numerous labs in the US and UK, the other side of the Iron Curtain had a lot of challenges. For one thing, these scientists couldn’t read Western journals that described their operation without special permission from Communist Party elders. Getting parts was an issue too. And the party continued to not understand the steps needed to construct the machine, and continually blocked the scientists’ efforts.

Lebedev led a team of researchers outside of Kyiv in the early 1950s to build the first digital computer, which had a 5 kHz CPU that performed 3,000 operations per second. Nevertheless, it could solve linear equations of 400 unknowns. He was transferred to Moscow where, over the course of his career, he built more than a dozen computers. This is where Glushkov came into play to help direct these projects both in Moscow and Kyiv, including the first computers based on transistors (and later using integrated circuits) and not the temperamental vacuum tubes. (One of which, the MNP3, is shown above.) Part of the challenge these projects faced was the top-down Soviet system of central planning committees running seemingly endless equipment performance reviews and testing. Those early machines were used in utilitarian projects such as designing synthetic rubber manufacturing processes, building railroad tracks, and controlling chemical processes. Glushkov was also instrumental in the development of the Soviet internet, which faced a difficult birth thanks to Soviet bureaucracy.

Another chapter documents the rise of the personal computer. The Soviet versions, called the Specialist-85 and ZX Spectrum computers, were developed in the 1980s in Ukraine. Both were widely available as a hobbyist’s construction kit from department stores. The former ran BASIC on cloned Intel-like CPUs. The latter machine was built with its own CPU designed in Ukraine and was a gaming machine which looked a lot like the Sinclair models of that era. As PCs became popular around the world, the Soviet factories began offering their own IBM PC clones, such as the Neuron and the Poisk, running their own versions of the DOS and CP/M operating systems. Many of these machines were built in Ukrainian factories. That all changed in 1994, when imported Western-built PCs were sold in the former Soviet Union.

The second half of the book documents eight computer companies that have flourished in the modern era, including Grammarly, MacPaw (which sells the CleanMyMac utility and is one of the sponsors of the book), Readdle (which was early to the mobile app marketplace), and several others.

The book is a fascinating look at a part of history that I wasn’t familiar with, even though I have been involved with PCs and tech since their early days in the 1980s.

The case for saving disappearing government data

With every change in federal political representation comes the potential for loss of data collected by the previous administration. But what we are seeing now is wholesale “delete now, ask questions later” thanks to various White House executive orders and over-eager private institutions rushing to comply. This is folly, and I’ll explain my history with data-driven policymaking that goes back to the late 1970s, with my first post-graduate job working in Washington DC for a consulting firm.

The firm was hired by the Department of the Interior to build an economic model that compared the benefit of the Tellico Dam, under construction in Tennessee, with the benefit of saving the snail darter, a small fish that was endangered by the dam’s eventual operation. At the time we were engaged by the Department of the Interior, the dam was mostly built but hadn’t yet started flooding its reservoir. Our model showed more benefit from the dam than from the fish, and was part of a protracted debate within Congress over what to do about finishing the project. Eventually, the dam was finished and the fish was transplanted to another river, but not before the Supreme Court weighed in and several votes were cast.

In graduate school, I was trained to build these mathematical models and to get more involved in how to support data-driven policies. Eventually, I would work for Congress itself, at a small agency called the Office of Technology Assessment. That link will take you to two reports that I helped write on various issues concerning electric utilities. OTA was a curious federal agency that was designed from the get-go to be bicameral and bipartisan to help craft better data-driven policies. The archive of reports is said to be “the best nonpartisan, objective and thorough analysis of the scientific and technical policy issues” of that era. An era that we can only see receding in the rear-view mirror.

OTA eventually was caught in political crossfire and was eliminated in 1995, when Congress cut off its funding. Its removal might remind you of other agencies that are on their own endangered species list.

I mention this historical footnote as a foundation to talk about what is happening today. The notion of data-driven policies may be thought of as harking back to when buggy-whips existed. But what is going on now is much more than eliminating people who work in this capacity across our government. It is deleting data that was bought and paid for by taxpayers, data that is unique and often not available elsewhere, data that represents historical trends and can be useful to analyze whether policies are effective. This data is used by many outside of the federal agencies that collected it, for purposes such as figuring out where the next hurricane will hit and whether levees are built high enough.

Here are a few examples of recently disappearing databases:

- The National Law Enforcement Accountability Database, which documents law enforcement misconduct, launched in 2018. It is no longer active and is being decommissioned by the DOJ.

- The Pregnancy Risk Assessment Monitoring System, which identifies women who have high-risk health problems, was launched in 1987 by the Centers for Disease Control. Historical data appears to be intact. Another CDC database, the Youth Risk Behavior Surveillance System, was taken down but was ordered by courts to be restored.

- The Climate and Economic Justice Screening Tool was created in 2022 to track communities’ experiences with climate and other environmental programs. It was removed from the White House website and was partially restored on a GitHub server. One researcher said to a reporter, “It wasn’t just scientists that relied on these datasets. It was policymakers, municipal leaders, stakeholders and the community groups themselves trying to advocate for improved, lived experiences.”

Now, whether or not you agree with the policies that created these databases, you probably would agree that the taxpayer-funded investment in historical data should at least be preserved. As I said earlier, any change of federal administration has been followed by data loss. This has been documented by Tara Calishain here. She tells me what is different this time is that the amount of imperiled data is greater and that more people are now paying attention, doing more reporting on the situation.

There have been a number of private sector entities that have stepped up to save these data collections, including the Data Rescue Project, Data Curation Project, Research Data Access and Preservation Association and others. Many began several years ago and are sponsored by academic libraries and other research organizations, and all rely on volunteers to curate and collect the data. One such “rescue event” happened last week at Washington University here in St. Louis. The data that is being copied is arcane, such as from instruments that track purpose-driven small earthquakes deep underground in a French laboratory or collecting crop utilization data.

I feel this is a great travesty, and I identify with these data rescue efforts personally. As someone who has seen my own work disappear because of a publication going dark or just changing the way they archive my stories, it is a constant effort on my part to preserve my own small corpus of business tech information that I have written for decades. (I am talking about you, IDG. Sigh.) And it isn’t just author hubris that motivates me: once upon a time, I had a consulting job working for a patent lawyer. He found something that I wrote in the mid-1990s after acquiring tech on eBay that could have a bearing on his case. His firm flew me out to try to show how it worked. But like so many things in tech, the hardware was useless without the underlying software, which was lost to the sands of time.

Don’t fall for this pig butchering scam

A friend of mine recently fell victim to what is now called pig butchering. Jane, as I will call her, lives in St. Louis. She is a well-educated woman with multiple degrees and decades of management experience. But Jane is also out more than $30,000 and has had her life upended as a result of this experience, having to change bank accounts and email addresses and obtain a new phone number.

The term refers to a complex cybercrime operation that has at its heart the ability to control the victim and compel them to withdraw cash from their bank account and send it via bitcoin to the scammer. The reason why this scam works is because the victim is taking money from their account. The various fraud laws don’t cover you making this mistake. I will explain the details in a moment.

Many of us are familiar with the typical ransomware attacks, where the criminals receive the funds directly from their victims: these transactions might be anonymous but they are reversible. So let’s back up for a moment and track Jane’s actions leading up to the scam.

In Jane’s situation, the attack began when her computer displayed a warning message saying that it had been hacked and that she should call a phone number to disinfect it. Somehow, the malware behind this message was transmitted, typically via a phishing email. This is the weak point of the scam. Every day I get suspicious emails. Most are caught by the spam filters, but occasionally things break through. As I was helping Jane get her life back on track, my inbox was flooded with email confirmations of an upcoming stay at a hotel. At one point, I think I had a dozen such “confirmations.” Perhaps the guest made a legitimate mistake and used my email address, but more likely, as these emails piled up, this was an attempted phishing scam.

Anyway, back to Jane. She called the number and the attacker proceeded to convince her that she was the victim of a scammer, which ironically was true at the time, and probably the first and last thing he said that was true. He claimed that her computer was infected with all sorts of child porn, and that she could be legally liable. She believed the scammer, and over the course of several hours, stayed on the phone with him as she got in her car, drove to her bank and withdrew her cash.

Now, in the cold light of a different day, Jane understands her mistake. “I was a lawyer. I should have recognized this was all a fabrication,” she told me, rather abashedly. “I should have known better but I was caught up in the high emotional drama at that moment and wasn’t thinking clearly.” Eventually, her attacker directed her to a bitcoin “ATM” where she could feed in her $100 bills and turn them into electrons of cybercurrency. Her attacker had thoughtfully sent her a QR code that contained his address. Think about that: she is standing in a convenience store, feeding $100 bills into this machine. That takes time. That takes determination.

Jane is computer literate, but doesn’t bank online. She manages her investments the old-fashioned way: by calling her advisors or visiting them in person. She has a cellphone and a computer, and while I was helping her get her digital life back in order we were remembering where we were when we first used email many decades ago and how new and shiny it was before scammers roamed the interwebs.

So how did the scam unravel? After spending all afternoon on the phone, the scammer got greedy and wanted more fat on the pig, so to speak. She called him back on her special hotline number and he asked her to withdraw more money from her bank account. She went back to her bank, and fortunately got the same teller that she had the day before. He questioned her withdrawal and that brought the butcher shop operation to a halt when she revealed that she was being directed by the scammer.

But now comes the aftermath, the digital cleanup in Aisle 7. And that will take time, and effort on Jane’s part to ensure that she has appropriate security and that her contact info is sent to the right places and people. But she is still out the funds. She knows now not to get caught up in the moment just because an email or a popup message tells her something.

Avoiding pig butchering scams means paying attention when you are reading your email and texts. Don’t multitask; focus on each individual message. And when in doubt, just delete.

A review of AI Activated, a report for PR pros and others

The USC Annenberg Center for Public Relations publishes its “Relevance Report” every January, and this year’s edition is mostly about AI. It is more an anthology of views from 50 corporate folks, some in PR and some in other industries that are PR-adjacent. Even if you aren’t a PR pro or a journalist, the 111-page report is worth a download and at least an hour of your time.

Here are some of their insights that I found most, uh, relevant.

The lead-off piece is by Gerry Tschopp, head of Experian’s comms team. They have been using ChatGPT to speed up their responses with stockholder communications and social media analytics. “What once required hours of research and revisions is now handled in a matter of minutes” with the AI chat tools.

Many of the authors point to how AI can automate the mundane, everyday tasks such as organizing databases or formatting reports or providing other suggestions to improve the quality of first drafts. Jaimie McLaughlin, a headhunter, uses AI to enhance candidate matching for recruitment purposes. Pinterest is using AI to reorder its content feed to focus on inspirational and more positive and actionable content. Grubhub is using AI to design new ad campaigns that focus on more emotionally-charged moments, such as the changes wrought with a newborn, or creating a first draft of a press release in a matter of seconds. Microsoft (who as a corporate sponsor has several contributions) has redesigned its transcription workflow of interviews using AI, as shown here. And Edelman PR is using AI to be more proactive at client reputation management and in improving trust on specific business outcomes. This was echoed by another PR pro that went into specifics, such as using AI to detect and analyze situations that could turn into a full-blown crisis by automating data collection in real-time, tracking the evolution of any issues as they unfold. AI can do sentiment analysis from this data, something that used to be fairly tedious manual work.

ABC News is using AI to debunk AI-generated viral videos, because they are so easily created. As one producer put it, “Here’s what keeps me up at night: It takes eight minutes and a few dollars to create a deepfake. Truth, measured in pixels and seconds, has never been more fragile.”

It is clear from these and other examples peppered throughout the report that, as Gary Brotman of Secondmind says, “AI tools have become integral to everything from automating social media monitoring and trend analysis to enhancing campaign measurement. ChatGPT has become my co-author for just about everything.” His essay contains some interesting predictions of where AI is going over the next five years, such as with hyper-personalized communications, predictive content creation and eroding knowledge silos everywhere. Yet despite these innovations, he feels that AI adoption has been slower and less impactful than many predicted because we have neglected the human element.

“The integration of AI into PR isn’t a short-term project with a finite end date — it’s an ongoing journey of innovation and refinement,” says one AI executive. And I think that is a good thing, because AI will bring out the lifelong learners to experiment and use it more. It will encourage us to think beyond the obvious, to find interesting connections in our experiences and contacts.

And there are plenty of tools to use, of course. Dataminr (newsroom workflow), Zignal Labs (real-time intel), Axios HQ (writing assistant), Glean (various AI automated assistants), and Otter.ai (transcriptions) were all mentioned in the report. I am sure there are dozens more.

In a survey conducted by Waggener Edstrom PR, the top four concerns about adopting AI tools for PR purposes included information security, factual errors and data privacy, all mentioned by almost half the respondents. That seems about right.

Sona Iliffe-Moon, the chief communications officer at Yahoo, sums things up nicely: We have to focus on the communications that matter most, use AI for scale not strategy, and put authenticity and trust first but verify with humans. Trust but verify: now where did we hear those words before? Luckily, we have chatbots and Wikipedia to help out.

CSOonline: A buyers guide for SIEM products

Security information and event management software (SIEM) products have been an enduring part of enterprise software ever since the category was created back in 2005 by a couple of Gartner analysts. It is an umbrella term that defines a way to manage the deluge of event log data to help monitor an enterprise’s security posture and be an early warning of compromised or misbehaving applications. It grew out of a culture of log management tools that have been around for decades, reworked to focus on security situations. Modern SIEM products combine both on-premises and cloud log and access data along with using various API queries to help investigate security events and drive automated mitigation and incident response.

For CSOonline, I examined some of the issues for potential buyers of these tools and pointed out some of the major features that differentiate them. This adds to a collection of other buyers guides of major security product categories.

Why we need more 15-minute neighborhoods

I have split my years living part of the time in suburbs and part in urban areas. This is not counting two times that I lived in the LA area, which I don’t quite know how to quantify. I have learned that I like living in what urbanist researchers (as they are called) classify as a “15-minute neighborhood,” meaning that you can walk or bike to many of the things you need for your daily life within that time frame, which works out to about a mile or so walk and perhaps a three-mile bike ride. I also define my neighborhood in St. Louis as walk-to-Whole Foods and walk-to-hospital, somewhat tongue-in-cheek.
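For anyone who wants to check the arithmetic behind those distances, here is a minimal sketch. The speeds are my own assumptions (a brisk 4 mph walk and a casual 12 mph bike ride), not figures from the research, and `distance_miles` is just an illustrative helper name.

```python
def distance_miles(speed_mph: float, minutes: float) -> float:
    """Distance covered at a steady speed: speed times time in hours."""
    return speed_mph * minutes / 60


WALK_MPH = 4    # assumed brisk walking pace
BIKE_MPH = 12   # assumed casual cycling pace

print(distance_miles(WALK_MPH, 15))  # walking reach in 15 minutes
print(distance_miles(BIKE_MPH, 15))  # biking reach in 15 minutes
```

A slower 3 mph stroll cuts the walking reach to three-quarters of a mile, which is why “a mile or so” is the right level of precision.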

Why is this important? Several reasons. First, I don’t like being in a car. During my last residency in LA, I had a 35-mile commute, which could take anywhere from 40 minutes to hours, depending on traffic and natural accidents. At my wife’s suggestion, I turned that commute into a 27-mile car ride and got on my bike for the last (or first) leg. While that lengthened the commute, it got me to ride each day. Now my commute is going from one bedroom (the one I sleep in) to another (that I work in). Some weeks go by where I don’t even use the car.

Second, I like being able to walk to many city services, even apart from WF and the doctors. When the weather is better, I bike in Forest Park, which is about half a mile away and is a real joy for other reasons besides its road and path network.

This research paper, which came out last summer, called “A universal framework for inclusive 15-minute cities,” talks about ways to quantify things across cities and takes a deep dive into specifics. It comes with an interactive map of the world’s urban areas that I could spend a lot of time exploring. The cities are mostly red (if you live here in the States) or mostly blue (if you live in Europe and a few other places). The colors aren’t an indication of political bent but how close to that 15-minute ideal most of the neighborhoods that make up the city are. Here is a screencap of the Long Island neighborhood that I spent many years living in: the area shown includes both my home and office locations, and for the most part is a typical suburban slice.

The cells (which in this view are the walkable area from a center point) are mostly red in that area. Many commuters who worked in the city would take issue with the scores in this part of Long Island, which has one of the fastest travel times into Manhattan, and in my case, I could walk to the train within 15 or so minutes.

The paper brings up an important issue: to be useful and equitable, cities have to be inclusive and have services spread across their footprints. Most don’t come close to this ideal. For the 15-minute figure to apply, you need density high enough that people don’t have to drive. The academics write, “the very notion of the 15-minute city can not be a one-size-fits-all solution and is not a viable option in areas with a too-low density and a pronounced sprawl.”

Ray Delahanty makes this point in his latest video where he focuses on Hoboken, New Jersey. (You should subscribe to his videos, where he talks about other urban transportation planning issues. They have a nice mix of entertaining travelogue and acerbic wit.)

Maybe what we need aren’t just more 15-minute neighborhoods, but better distribution of city services.